“I think the new moderators are striking *just* the right tone for dealing with the 4chan infestations…” (/lame attempt at New Yorker-style caption)

Twitter is rolling out three new tools to crack down on trolls, spam & abuse

Meanwhile, there’s also “Project Coral” – backed by NY Times, Washington Post and Mozilla – rolling out Talk and Ask, aimed at making comment threads a way to connect with an audience – rather than to alienate & depress them.

I’ve written here in the past about the problem with trolls, spam, stalking and abuse on the web; it’s one of the biggest deterrents to participation in online forums and discussions, and it poses an increasing danger not only to having functional websites, but to our having a functioning society. And no, that is not just me feeling petulant after getting dissed on Reddit – the toxic atmosphere from the web has bled over into all manner of discourse, in what the New York Times calls “The Culture of Nastiness.”

Social media has normalized casual cruelty, and those who remove the “casual” from that descriptor are simply taking it several repellent steps further than the rest of us. That internet trolls typically behave better in the real world is not, however, solely from fear of public shaming and repercussions, or even that their fundamental humanity is activated in empathetic, face-to-face conversations. It may be that they lack much of a “real world” (a strong sense of community) to begin with, and now have trouble relating to others.

According to a Pew study, 70% of millennials have been harassed online, and 26% of women have been stalked online. A study in the psychology journal *Personality and Individual Differences* found:

… strong positive associations emerged among online commenting frequency, trolling enjoyment, and troll identity, pointing to a common construct underlying the measures. Both studies revealed similar patterns of relations between trolling and the Dark Tetrad of personality: trolling correlated positively with sadism, psychopathy, and Machiavellianism, using both enjoyment ratings and identity scores. Of all personality measures, sadism showed the most robust associations with trolling and, importantly, the relationship was specific to trolling behavior […] Thus cyber-trolling appears to be an Internet manifestation of everyday sadism.

I’m actually rather glad that I’m not (yet) at the point in my life where I consider sadism to be an “everyday” kinda thing.

But the larger point is that now that everyone pretty much agrees that online culture has become unbelievably toxic, giant internet companies are finally making a concerted effort to curb obnoxious and disgusting users. Yes, even the notoriously hard-to-kill Twitter trolls.

According to the announcement on Twitter’s blog:

- Twitter is finally going to take action against the trolls who spawn repeated sock puppets so they can keep coming back again and again to harass their victims & spew hate;

- If you’ve muted or blocked a user who is stalking you – or whose content you just find ugly & offensive – their tweets will no longer show up in your searches;

- Replies that are “potentially abusive or low-quality” are collapsed under the original tweet, so you won’t have to see spam/hate/abuse underneath decent or informative content. You can still see them if you opt in by clicking “show less relevant replies,” but it’s no longer going to be viable for craptastic trolls and spammers to snarf up traffic crumbs by trying to TweetJack an otherwise popular conversation topic.

For me, the most interesting of the three moves is the last one, where they are going to try to use the big freaky-smart Artificial Intelligence bots to identify, isolate and punish stuff like this:

Amid the conversation about a new TV show, we get this tweet about Iran. This is a common tactic of politically motivated trolls: they find a conversation that a lot of people are paying attention to online, and then they jump in and start cramming in their repetitive, hysterical messages. Usually done by bots or fanatics. Or spammers.

These are still just common-sense moves that probably should have been made a long, long time ago.

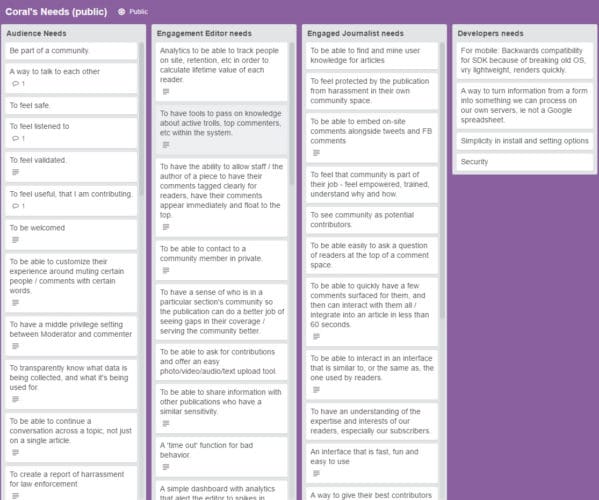

Meanwhile, over at Project Coral, the focus is on helping site owners take control of the comment threads on their sites. I’ve long said that the one thing that binds site owners together the world over is their immediate anger and disgust when the topic of their sites’ commenters is raised. The Coral team has been doing a lot of research into what is needed, and has created a Trello page encapsulating the hierarchy of needs. Zoom in or click through on the link, because there’s a lot there to process:

What struck me first was the thoughtfulness on display here. It wasn’t just a bitch session of “show me a way to make my readers STFU.” Instead, the #1 item for journalists was “find and mine user knowledge for stories.” Attaboy! Don’t just see the users as your enemy; realize that they can also be very valuable sources of information for stories.

They’ve also created a “sandbox” where they model what they think an actual, functioning comment section could and should look like. It has a pretty nice GUI, with tools that accordion open and closed so that a community manager (usually a fairly low-paid individual, sadly) can quickly toggle the community’s settings on and off.

As you can see in the short video/screen capture I created, you can turn trolls on and off to demonstrate what they look like when they invade the feed. Of course, the content the trolls post here barely registers as a blip compared with actual real-world trolling … but then again, if you had a tool that actually made steam shoot out of your ears the way real-world trolls do, well, that would be kinda meta-counter-productive, right?