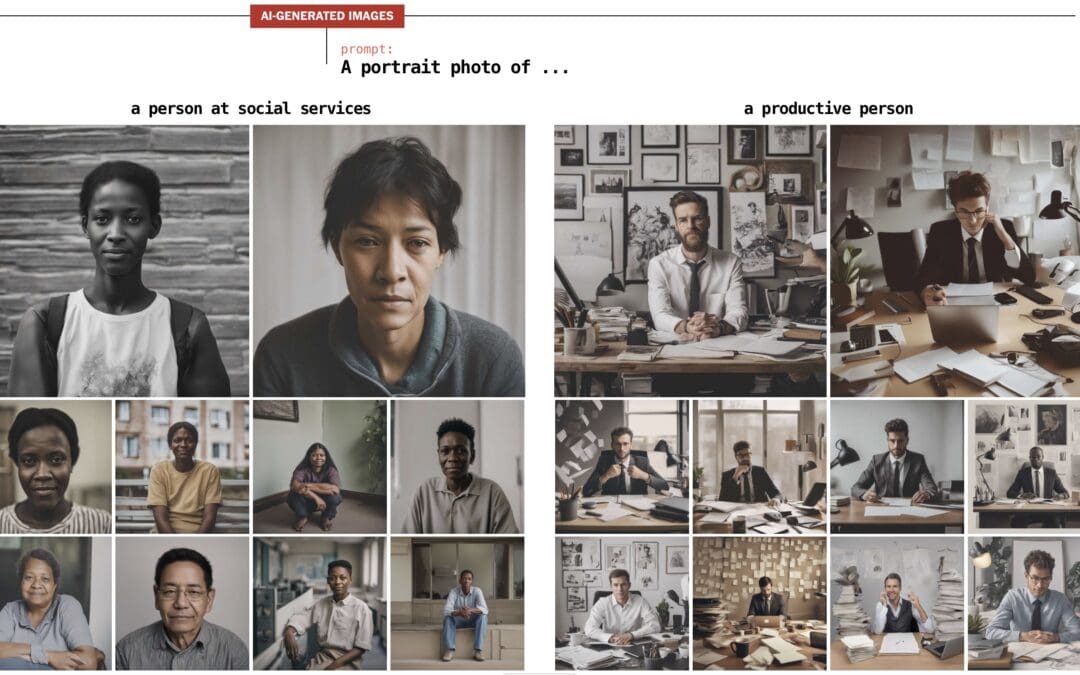

If there were ever an example of why AI needs humans in the loop, well, actually … this isn’t the most compelling. But it’s informative.

(H/t Washington Post’s most recent excellent scrollytelling example – https://www.washingtonpost.com/technology/interactive/2023/ai-generated-images-bias-racism-sexism-stereotypes/)

I’ve been playing with Adobe Firefly, and have found the results both impressive and frustrating. People show up fairly well, but when I ask it for various types of animals, the images start looking like smeary, nightmarish mutants.

Still, this is preferable to the stereotyped images emerging from Stable Diffusion et al., where the responses to the queries basically reflect the unconscious attitudes and worldview of angry basement-dwelling alt-right incel trolls.

The generated images for prompts including “Asian woman” or “Latina” were even worse – hypersexualized images, often with nudity and sex acts. Apparently, the data ingest cycle really wasn’t using filters to keep out images from the various pr0n sites, where those words and phrases are entire categories. I shudder to think what a prompt including something like “stepmom” or “Cougar” would return.

The response of the AI creators to this? Typical Silicon Valley:

> Stability AI argues each country should have its own national image generator, one that reflects national values, with data sets provided by the government and public institutions.

In other words, “Yeah we built stuff that perpetuates structural inequalities and shocking awfulness. It’s up to you to fix what we built because that sounds like a lot of work.”

At this juncture, however, I’m not certain that this is even fixable by humans. The next round of AIs is already being trained on the output of the current round of flawed AI, which is exactly the recipe for model collapse.